Main Day 2 - Wednesday 5th November

8:00 am - 9:00 am

Registration and Morning Networking

9:00 am - 9:30 am

KEYNOTE PRESENTATION: How to Scale Your AI Tooling, Design & Architecture: People, Platform, Process

Neil Thewlis -

Head of AI and Model Transformation,

HSBC

Led by Neil Thewlis, HSBC’s Head of AI and Model Transformation, this session explores how enterprises can build AI architectures that scale both technically and organizationally. As AI projects move from proofs of concept to production, IT leaders must balance quick wins with long-term vision. Neil will share HSBC’s journey in building long-term AI initiatives, focusing on three key pillars (people, platform, and process) to drive reusability, portability and scale.

- People, Platform, Process: Leveraging the right talent and culture, robust AI models and infrastructure, and streamlined workflows/governance to support AI growth.

- Building Beyond Short-Term: Avoiding the trap of focusing on near-term investments at the expense of longer-term goals and objectives.

- Developing AI solutions with reusability and portability front of mind, and avoiding vendor lock-in by using open standards and cloud-agnostic tools, so that your models and data can move freely as the landscape changes.

- Bridging the gap between technical implementation and business functionality by mapping AI use cases to the right architectural components so that technology choices directly serve business goals.

- Designing with adaptability in mind to accommodate emerging requirements around scale, control, and agentic AI

9:30 am - 10:10 am

PANEL DISCUSSION: How to Architect Your Generative AI Applications

Our first panel explores the core architectural decisions behind successful GenAI products and systems, from model selection and customisation to platform engineering, orchestration, and infrastructure optimisation. Attendees will gain actionable insight into building and deploying GenAI applications that meet enterprise-grade requirements for performance, scalability, and reliability.

- Selecting and customising foundation models: fine-tuning, prompt engineering, and managing centralised model repositories

- Leveraging MLOps platforms to orchestrate model lifecycle management within application pipelines

- Infrastructure design for training and inference: optimising GPU/TPU workloads, storage, and compute pipelines

- Deployment and distribution strategies for GenAI models in production environments

- Setting and meeting system-level performance benchmarks to ensure end-to-end reliability and scalability

10:10 am - 10:40 am

PRESENTATION: Scaling Generative AI: Introducing GenAIOps at Expedia

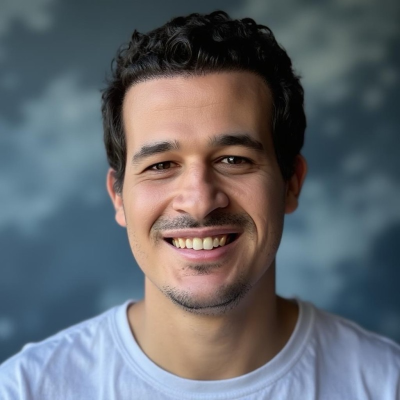

Hisham Mohamed -

Director of Generative AI Platform,

Expedia Group

As generative and agentic AI move from experimentation to enterprise scale, organisations need a new operational model to support them. Hisham Mohamed is applying his experience in machine learning to generative AI by introducing GenAIOps: a structured approach to building, deploying, and managing generative AI systems reliably and efficiently. This session breaks down the infrastructure, tooling, and processes required to support production-grade generative AI at scale and with control.

- What GenAIOps means and why it matters now

- Infrastructure patterns for scalable generative and agentic AI

- Automating deployment, monitoring, and iteration of large models

- Handling performance, cost, and governance in production environments

10:40 am - 11:10 am

Networking & Refreshments

11:10 am - 11:40 am

PRESENTATION: Driving Value With AI-Powered Software Engineering At TUI

Jie Zheng -

Technology Team Lead - Machine Learning Lab,

TUI

AI-assisted coding and software development tools are reshaping how engineering teams build and ship products. But beyond productivity gains, how do you turn AI into a strategic advantage and reframe software engineering as a commercial growth engine, not a cost center?

- Practical strategies for using AI in code generation to deliver business impact

- Mitigating risk: balancing autonomy and control with clear guardrails

- Overcoming internal resistance: addressing the learning curve and shifting team culture

- Communicating the value of AI-augmented engineering to business stakeholders

11:40 am - 12:10 pm

PRESENTATION: AI x Blockchain x Compute: Building Trustworthy, Scalable Intelligence

Deepak Paramanand -

Director Of Artificial Intelligence,

JPMorgan Chase & Co.

As AI models grow more powerful and compute-intensive, blockchain technologies are emerging as tools to help manage trust, transparency, and decentralised access to compute resources. This session explores how the convergence of AI, blockchain, and compute is set to reshape infrastructure strategies.

- Using blockchain to verify AI model provenance, usage, and audit trails

- Decentralised compute networks: unlocking distributed, low-cost GPU resources

- Tokenized incentives for data sharing, model training, and resource contribution

- Challenges in integrating blockchain with AI pipelines: latency, scale, and governance

- Emerging architectures enabling secure, transparent, and decentralized AI execution

12:20 pm - 1:20 pm

Networking Lunch

1:20 pm - 1:50 pm

PANEL DISCUSSION: Enabling AI-Ready Platforms and Building Valuable AI Products

Bryan Carmichael -

Director, Digital Innovation & Data Science,

Thermo Fisher Scientific

Floris Hermsen - Director Data Science & AI, Elsevier

Lasse Lund Sten Jensen - Head of Platform Orchestration, Novo Nordisk

AI’s foundations lie in robust, secure, and intelligent data platforms that can fuel innovation while preserving trust and control. In this panel, senior data and AI leaders will explore what it takes to architect enterprise-grade data environments that support high-impact AI products. From governance to business outcomes, this session will unpack the intersection of data architecture and product strategy in the age of AI.

- The critical role of high-quality, actionable data in powering GenAI and AI applications

- Strategies to secure, manage, and maximize the value of enterprise data while preserving privacy and compliance

- Building data platforms that make information findable, accessible, trustworthy, interoperable, and reusable (FAIR)

- Operationalising data governance at scale through automation, policy enforcement, and platform design

- How AI is reshaping product development and business models – and what that means for data architecture going forward

1:50 pm - 2:20 pm

PRESENTATION: Connecting Your LLMs to the Outside World with the Model Context Protocol

Peter Rees -

Lead Architect of Enterprise Data and AI/ML,

A.P. Moller - Maersk

There is a critical need to build AI systems that enable LLMs and AI agents to interact with external data, tools, and services in a secure, scalable, and reusable way. During this session, Peter Rees will share lessons learned on his team’s journey to embed the Model Context Protocol (MCP) across AI systems and agents.

- Architecting a scalable enterprise service, built for reusability and portability across workflows and domains

- Secure-by-design approaches for managing model input/output, data access boundaries, and traceability

- Lessons learned from A.P. Moller - Maersk’s journey to mature AI agents through MCP

2:20 pm - 2:50 pm

PRESENTATION: Operationalising AI: Infrastructure to Agents - Unlocking Business Value At Scale

Amit Nandi -

VP Solutions & Data Architect,

Barclays Investment Bank

As enterprises push beyond traditional machine learning towards autonomous AI agents, today’s MLOps platforms are being stretched to their limits. New demands, such as managing prompt versioning, orchestrating agent behaviour, and ensuring governance across complex AI systems, signal the need for a new operational paradigm. In this session, Amit will share a practical vision for the next generation of AI infrastructure, evolving enterprise architecture for the agentic AI era. He will draw on his work building systems in which humans interact with autonomous agents through LLM interfaces, and in which agents coordinate domain-specific LQMs and specialized SLMs.

- Rethinking the five foundational layers of AI (Infrastructure, Data, Code, Models, and UI) to introduce AgentOps as a new discipline focused on orchestration, memory, and real-time feedback loops, offering a blueprint for scalable, human-aligned AI systems.

- Aligning technical foundations for scalable, agent-driven AI adoption

- Understanding where MLOps ends and LLMOps or AgentOps begins - and why it matters for your organisation

- What’s needed to support multi-agent systems with real-time collaboration and adaptation

- How to future-proof your AI stack for human-in-the-loop and autonomous use cases

2:50 pm - 3:20 pm

Networking & Refreshments

3:20 pm - 3:50 pm

PANEL DISCUSSION: Cloud, On-Prem, or Hybrid? Infrastructure Strategies for Enterprise AI at Scale

David Henstock -

Chief Data Scientist,

BAE Systems Digital Intelligence

Gabriel Cismondi - VP, Core Infrastructure Services Lead, JPMorgan Chase & Co.

This panel brings together senior tech leaders to discuss frameworks for evaluating and architecting the right environment for your AI initiatives, covering:

- Trade-offs between cloud, on-premises, and hybrid deployments for training and inference workloads

- When to invest in dedicated GPU infrastructure vs. leveraging cloud-based AI services

- Real-world examples of enterprises shifting from cloud to on-prem to control GenAI costs

- Architectures and tooling for managing hybrid environments seamlessly (compute, data, orchestration)

- Financial modelling and cost control: balancing CapEx and OpEx while ensuring performance

3:50 pm - 4:20 pm

PRESENTATION: From Tools to Trust: Driving Innovation with Intelligent Data and a Complex AI Supplier Ecosystem

Floris Hermsen -

Director Data Science & AI,

Elsevier

This session from Floris Hermsen, Director of Data Science & AI at Elsevier, explores how to harness and align your existing supplier landscape to drive AI innovation. From integrating across disparate systems to ensuring consistent, high-quality data for intelligent processing, we’ll look at what it takes to turn complexity into competitive advantage.

- Strategies for integrating AI within a diverse supplier ecosystem

- How to align data standards and consistency across platforms

- Enabling intelligent data processing through existing partnerships

- Governance and quality control measures for AI and LLMs

- Evolving from “human in the loop” to “AI as adjudicator” in complex workflows

4:20 pm - 4:50 pm

KEYNOTE PRESENTATION: Next-Generation AI Hardware Accelerators: Powering Performance at Scale

AI innovation is increasingly bottlenecked by compute. This session provides a deep dive into the latest AI accelerators reshaping enterprise infrastructure. Attendees will gain a practical understanding of:

- The performance characteristics and trade-offs between GPUs, TPUs, FPGAs, and custom silicon

- Aligning accelerator selection with specific AI workloads (training vs. inference, LLMs vs. vision models, etc.)

- Memory, bandwidth, and interconnect considerations for high-throughput AI pipelines

- Infrastructure implications – power, cooling, and rack design for high-density accelerator deployment

- Future trends in AI compute and what enterprise architects need to plan for now